Can You Close the Performance Gap Between GPU and CPU for Deep Learning Models? - Deci

By a mysterious writer

Description

How can we optimize the performance of deep learning models on CPU? This post discusses model efficiency and the performance gap between GPU and CPU inference.

Is there a way to Train simultaneously on CPU and GPU? (e.g. 2 separate neural network models) - Quora

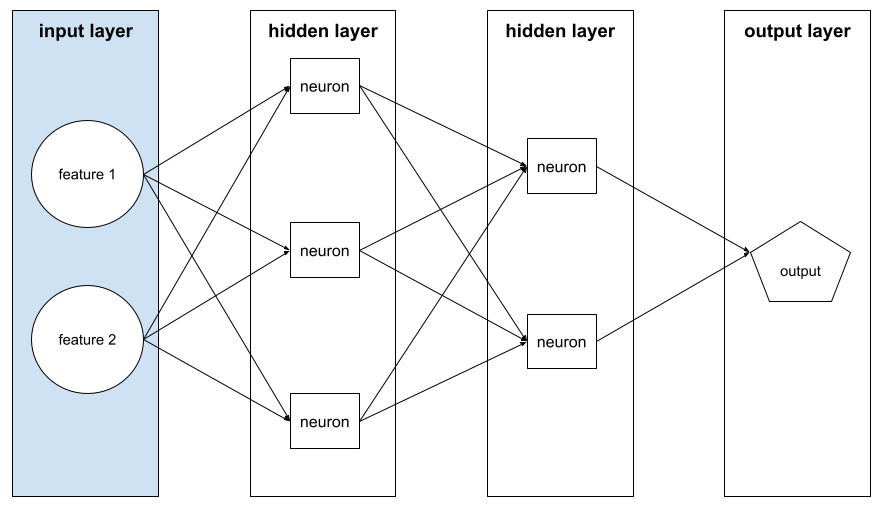

How GPUs Accelerate Deep Learning

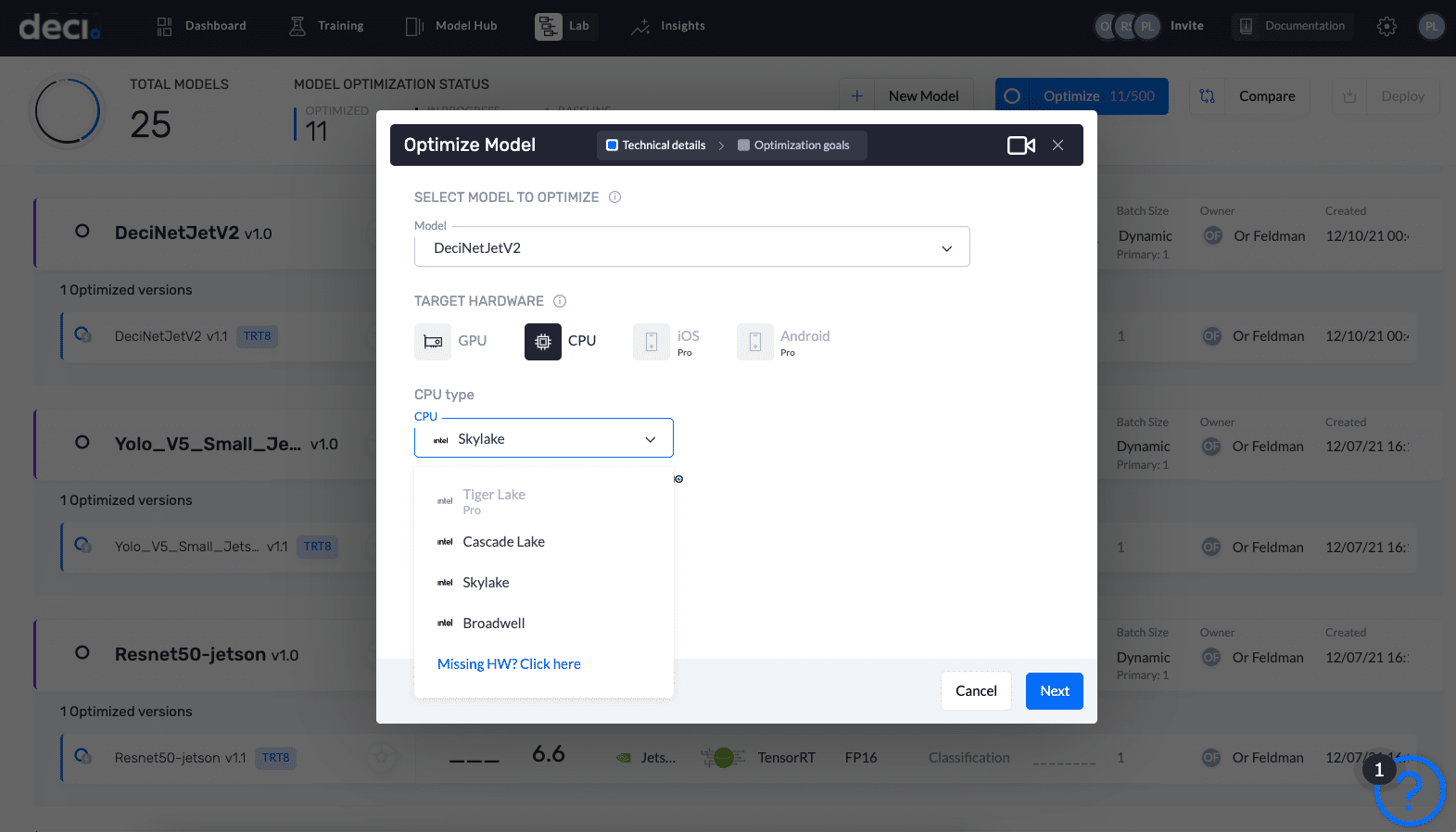

Deci's New Family of Models Delivers Breakthrough Deep Learning Performance on CPU

Deep Learning with Spark and GPUs

DeciNets AI models arrive with Intel CPU optimization

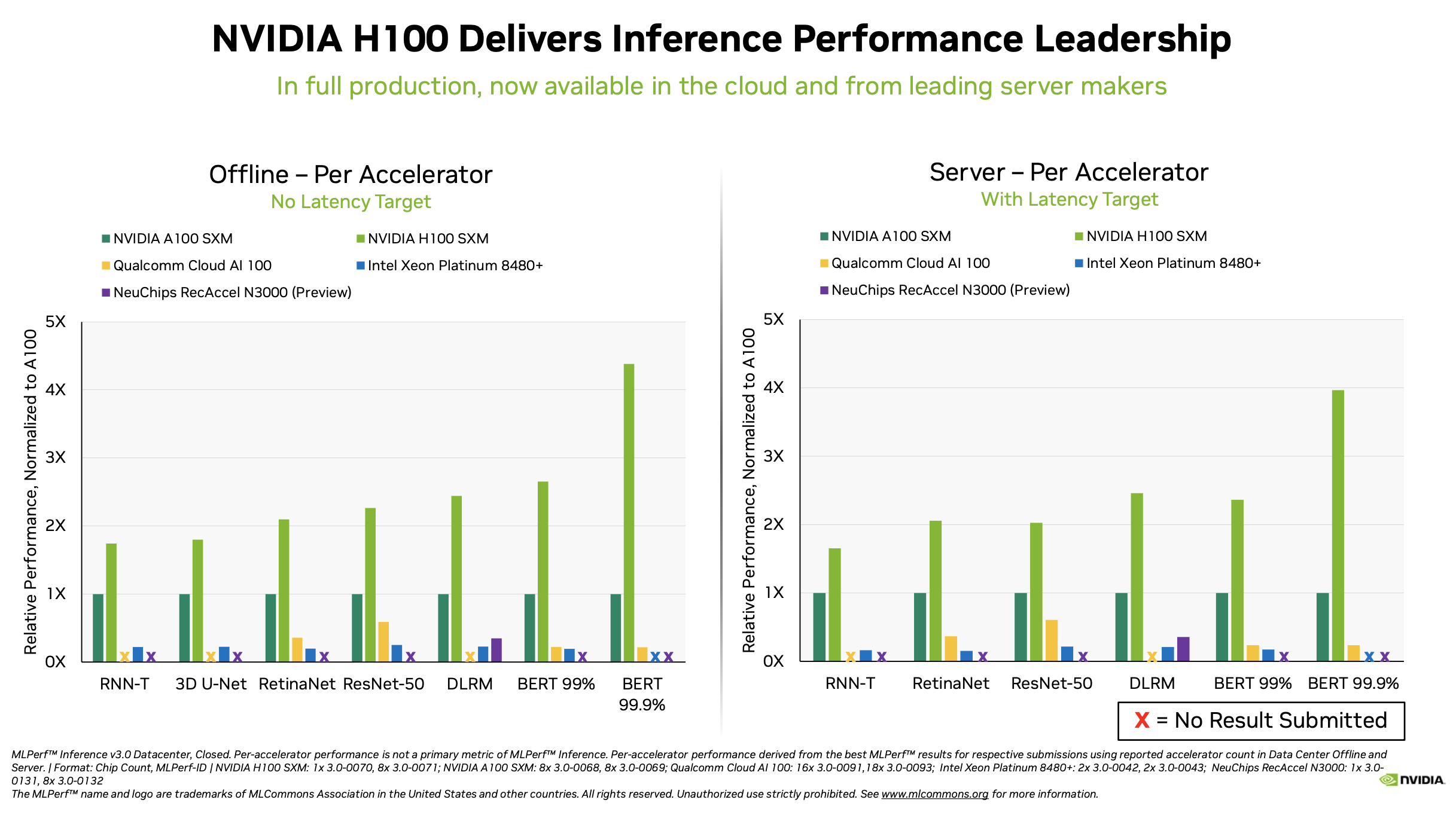

NVIDIA TensorRT-LLM Supercharges Large Language Model Inference on NVIDIA H100 GPUs

Measuring Neural Network Performance: Latency and Throughput on GPU, by YOUNESS-ELBRAG

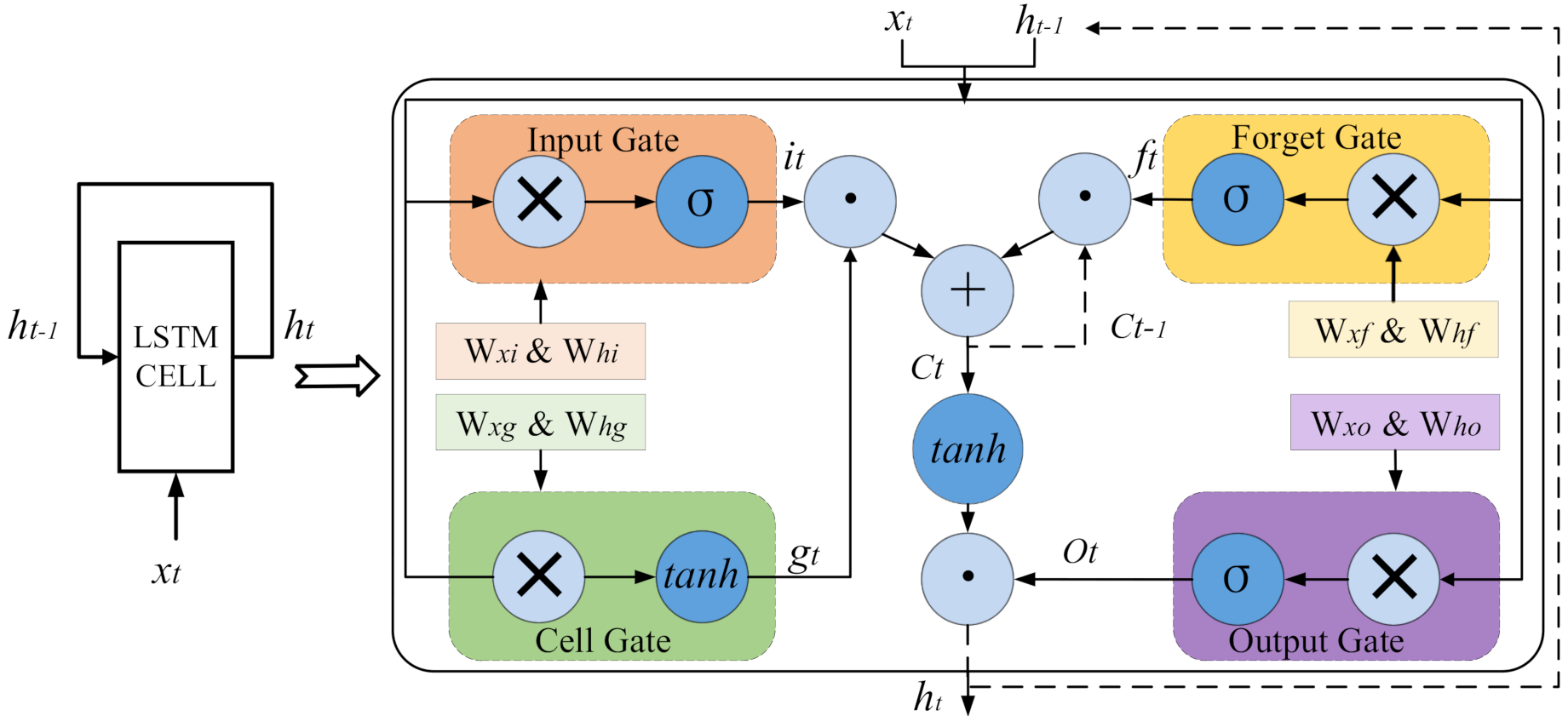

Machine Learning Glossary

Electronics, Free Full-Text

MLPerf Inference 3.0 Highlights - Nvidia, Intel, Qualcomm and…ChatGPT