vocab.txt · nvidia/megatron-bert-cased-345m at main

By a mysterious writer

Description

We’re on a journey to advance and democratize artificial intelligence through open source and open science.

[2204.02311] PaLM: Scaling Language Modeling with Pathways

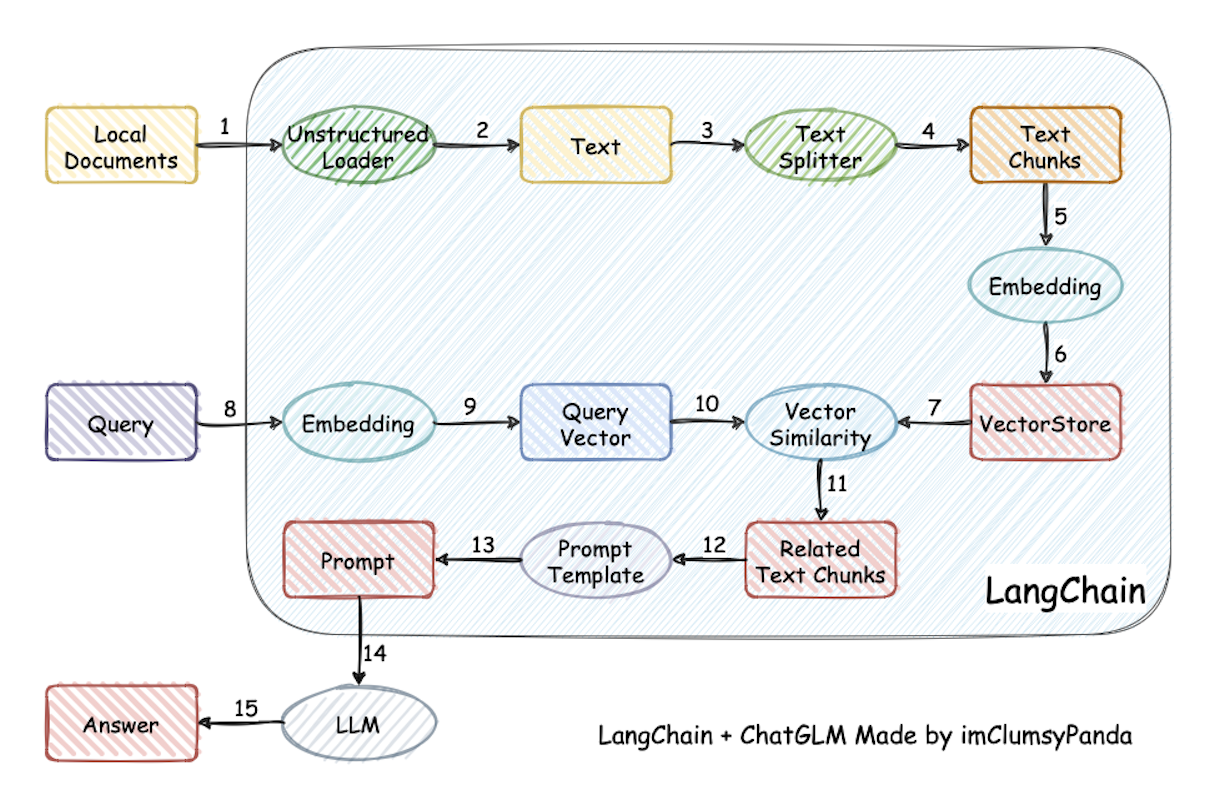

[PDF] BioReader: a Retrieval-Enhanced Text-to-Text Transformer for Biomedical Literature

Deep lexical hypothesis: Identifying personality structure in natural language.

Feedgrid

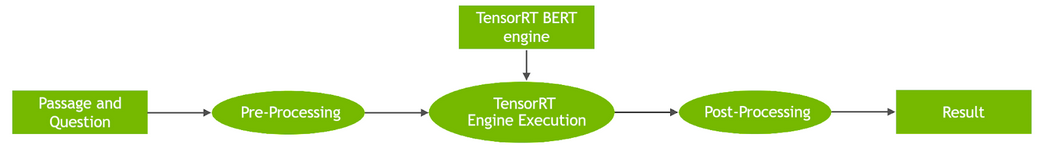

Real-Time Natural Language Understanding with BERT Using TensorRT

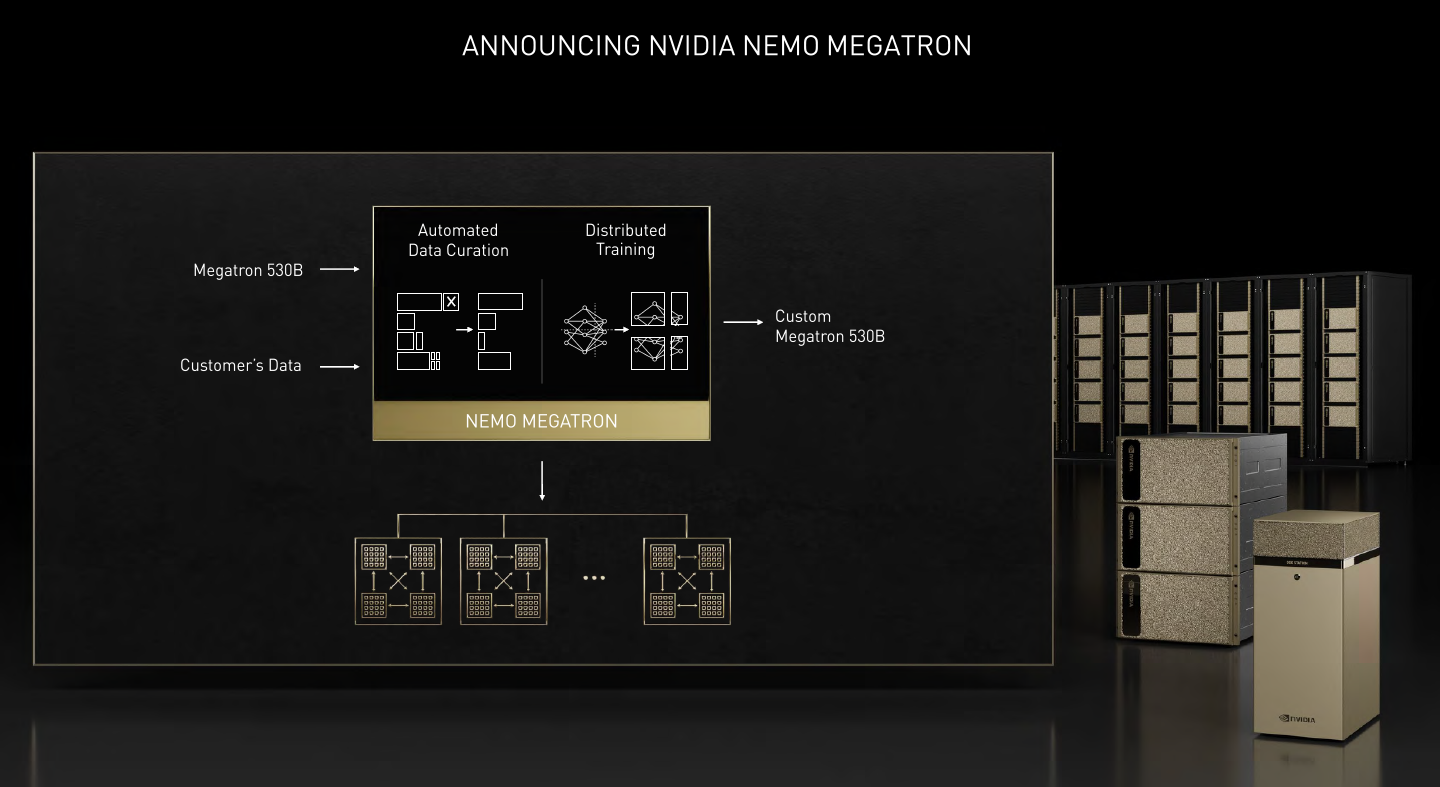

Nvidia Debuts Enterprise-Focused 530B Megatron Large Language Model and Framework at Fall GTC21

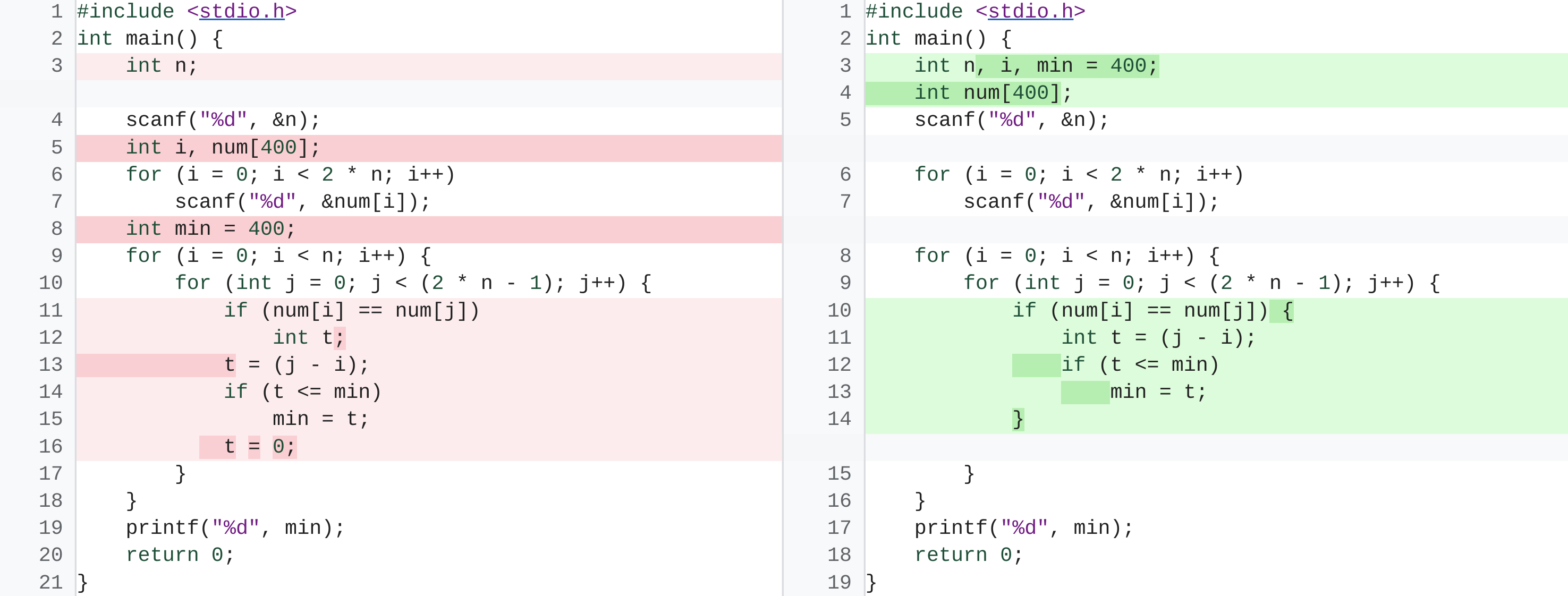

Mastering LLM Techniques: Training

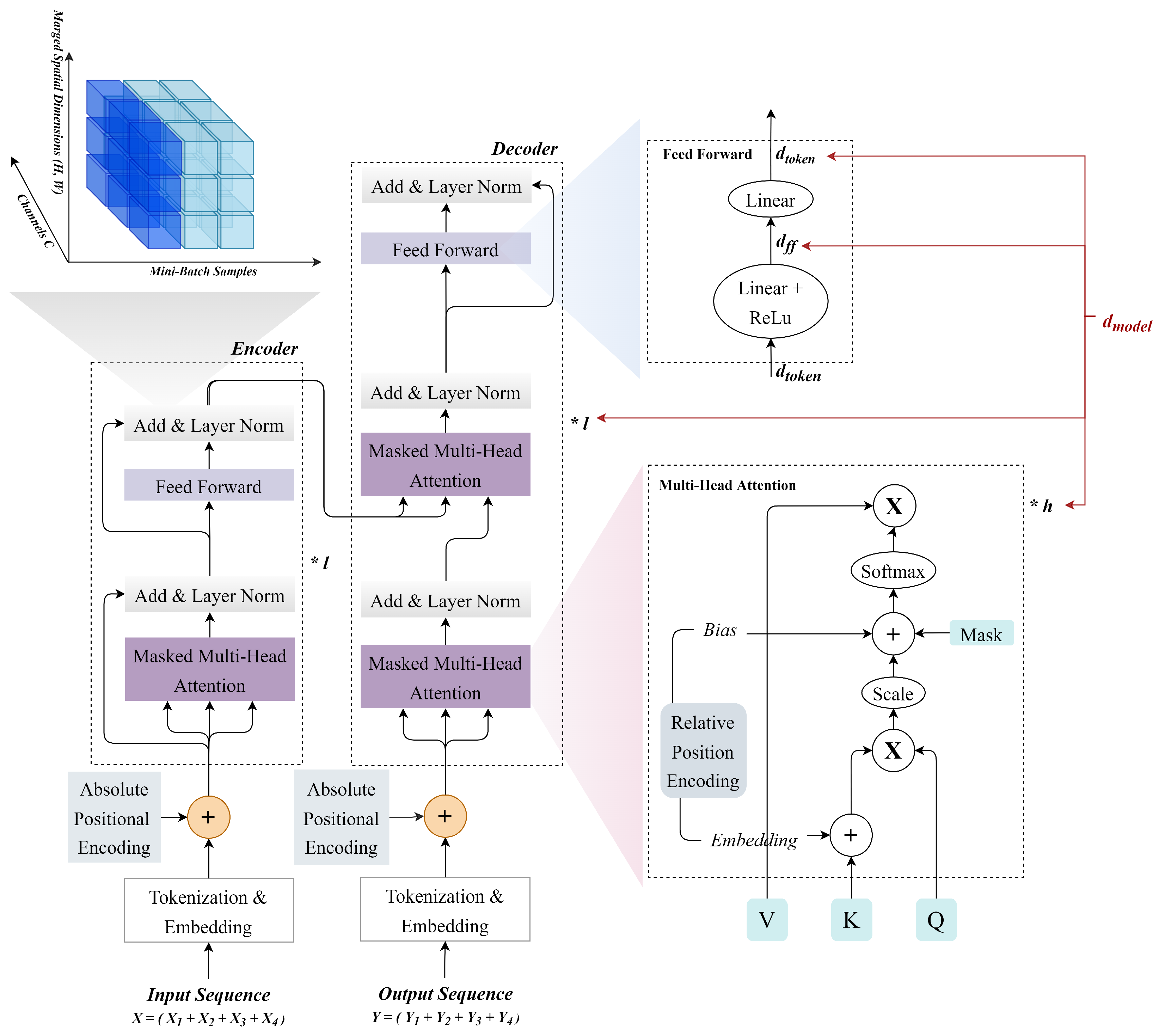

Machine Translation Models — NVIDIA NeMo

Brief Review — Megatron-LM: Training Multi-Billion Parameter Language Models Using Model Parallelism, by Sik-Ho Tsang

AMMU: A survey of transformer-based biomedical pretrained language models - ScienceDirect

'bert-base-uncased-vocab.txt' not found in model shortcut name list · Issue #9 · TencentYoutuResearch/PersonReID-NAFS · GitHub

Building State-of-the-Art Biomedical and Clinical NLP Models with BioMegatron

GitHub - EleutherAI/megatron-3d

AI, Free Full-Text