Computing GPU memory bandwidth with Deep Learning Benchmarks

By an anonymous writer

Description

In this article, we take an in-depth look at GPU memory bandwidth and how it affects how quickly the accelerator processes deep learning and other related computational workloads.
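
As a rough illustration of the arithmetic behind bandwidth comparisons, the sketch below computes a GPU's theoretical peak memory bandwidth from its per-pin data rate and memory bus width. It also computes the best-case runtime of a purely memory-bound operation and the effective bandwidth measured in a benchmark run. The helper names are hypothetical. The sketch assumes decimal units (1 GB = 10^9 bytes). It uses the commonly quoted RTX 3090 and RTX 4090 memory specs only as example inputs, so check them against your card's data sheet. Measured bandwidth in real benchmarks is usually lower than the theoretical peak.

```python
def peak_bandwidth_gb_s(data_rate_gbps_per_pin: float, bus_width_bits: int) -> float:
    """Theoretical peak DRAM bandwidth in GB/s (1 GB = 1e9 bytes).

    data_rate_gbps_per_pin: effective data rate of each memory pin, in Gbit/s.
    bus_width_bits: total width of the memory interface, in bits.
    """
    # Every pin moves data_rate gigabits per second; divide by 8 for gigabytes.
    return data_rate_gbps_per_pin * bus_width_bits / 8


def min_time_memory_bound_s(bytes_moved: float, bandwidth_gb_s: float) -> float:
    """Lower bound on runtime (seconds) for a kernel limited only by memory traffic."""
    return bytes_moved / (bandwidth_gb_s * 1e9)


def achieved_bandwidth_gb_s(bytes_moved: float, elapsed_s: float) -> float:
    """Effective bandwidth (GB/s) observed in a timed benchmark run."""
    return bytes_moved / elapsed_s / 1e9


if __name__ == "__main__":
    # Example inputs (assumed, commonly quoted specs):
    # RTX 3090: GDDR6X at 19.5 Gbps per pin, 384-bit bus.
    # RTX 4090: GDDR6X at 21 Gbps per pin, 384-bit bus.
    bw_3090 = peak_bandwidth_gb_s(19.5, 384)
    bw_4090 = peak_bandwidth_gb_s(21.0, 384)
    print(f"RTX 3090 peak: {bw_3090:.0f} GB/s")  # 936
    print(f"RTX 4090 peak: {bw_4090:.0f} GB/s")  # 1008

    # Element-wise c = a + b on float32 vectors: read a and b, write c,
    # so each element costs 3 * 4 bytes of memory traffic.
    n = 2**28
    bytes_moved = 3 * n * 4
    t = min_time_memory_bound_s(bytes_moved, bw_3090)
    print(f"Best case on RTX 3090: {t * 1e3:.2f} ms")  # 3.44
```

Comparing `achieved_bandwidth_gb_s` from a timed run against `peak_bandwidth_gb_s` gives the fraction of peak a kernel actually reaches. This ratio is what bandwidth-oriented benchmarks report in one form or another.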

NVIDIA GeForce RTX 4090 vs RTX 3090 Deep Learning Benchmark

Improving GPU Memory Oversubscription Performance

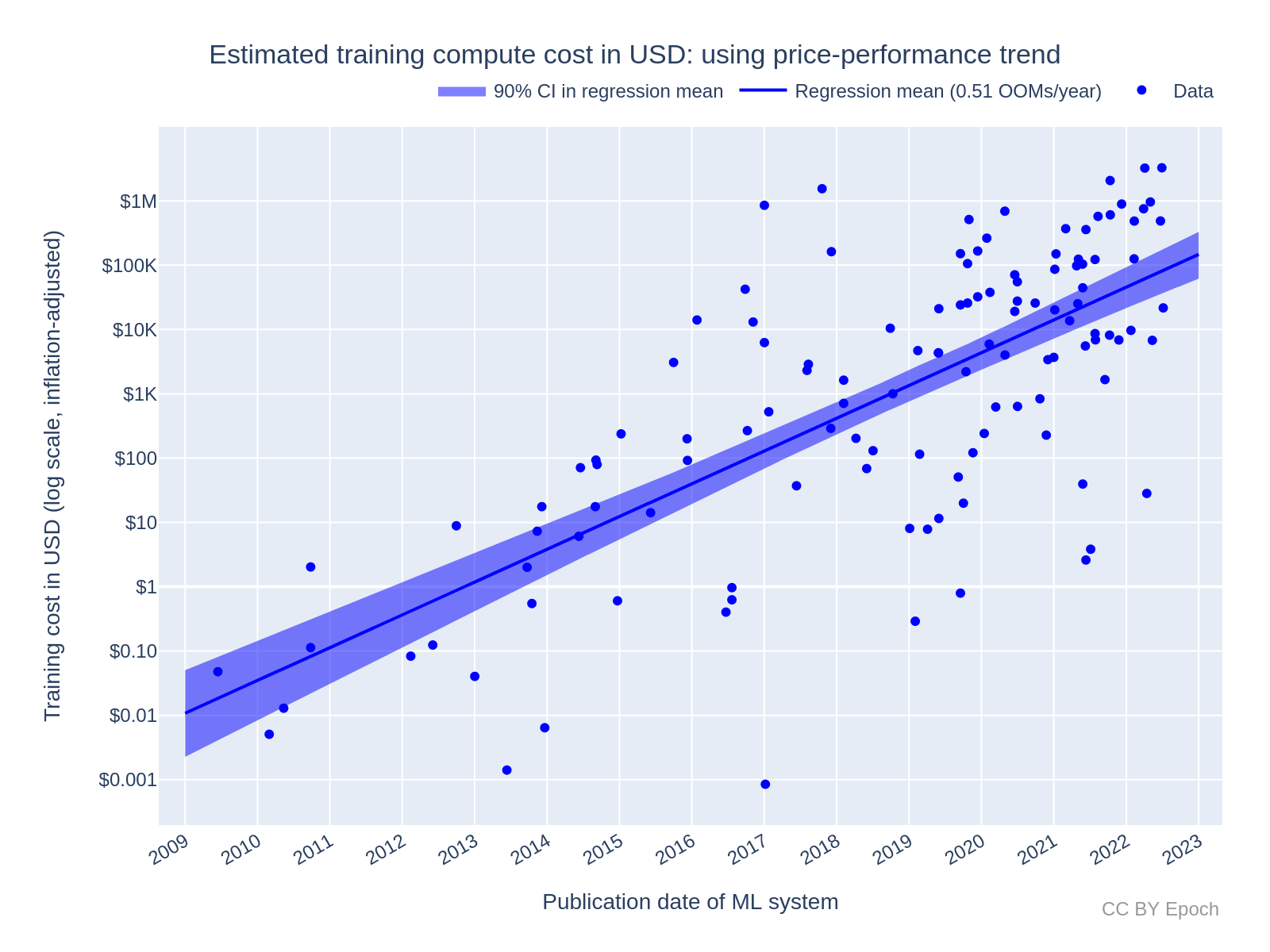

Trends in the Dollar Training Cost of Machine Learning Systems – Epoch

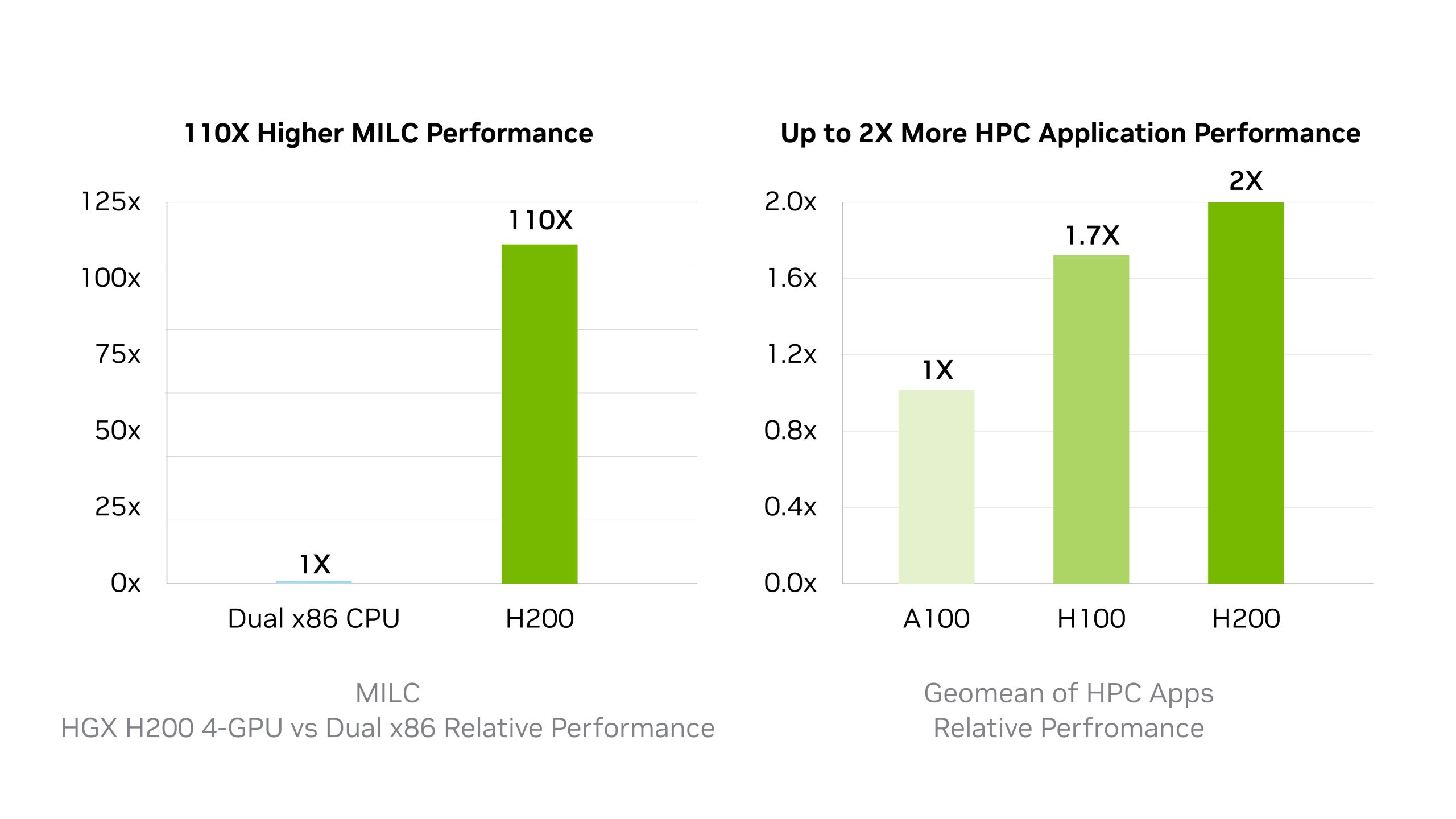

Nvidia launches the Hopper H200 GPU with 141GB of HBM3e memory

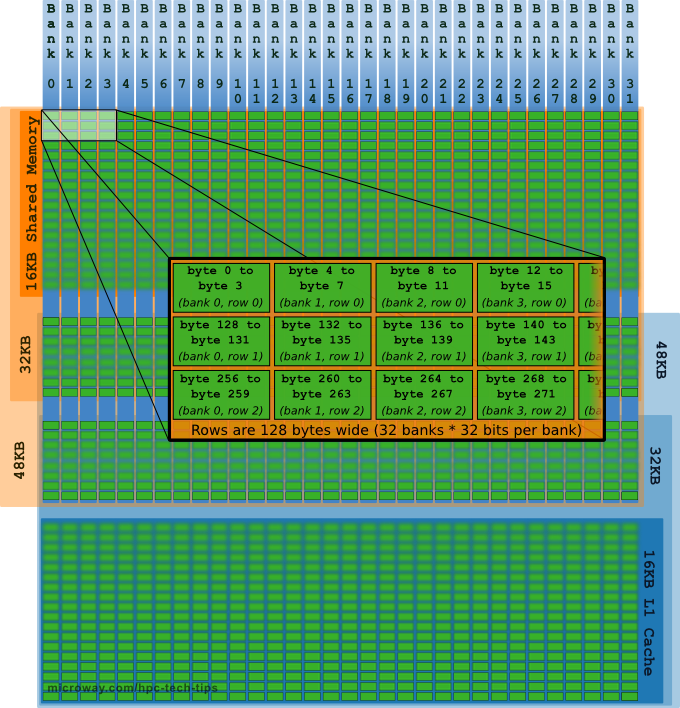

GPU Memory Types - Performance Comparison - Microway

Machine learning mega-benchmark: GPU providers (part 2)

Training LLMs with AMD MI250 GPUs and MosaicML

High Bandwidth Memory Can Make CPUs the Desired Platform for AI

The Best GPUs for Deep Learning in 2023 — An In-depth Analysis

Profiling and Optimizing Deep Neural Networks with DLProf and

[PDF] GPU-STREAM: Benchmarking the achievable memory bandwidth of

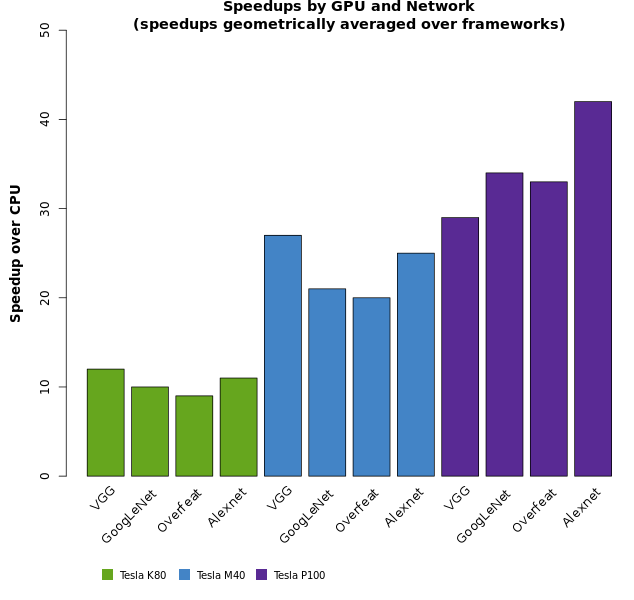

Deep Learning Benchmarks of NVIDIA Tesla P100 PCIe, Tesla K80, and

Benchmarking Large Language Models on NVIDIA H100 GPUs with

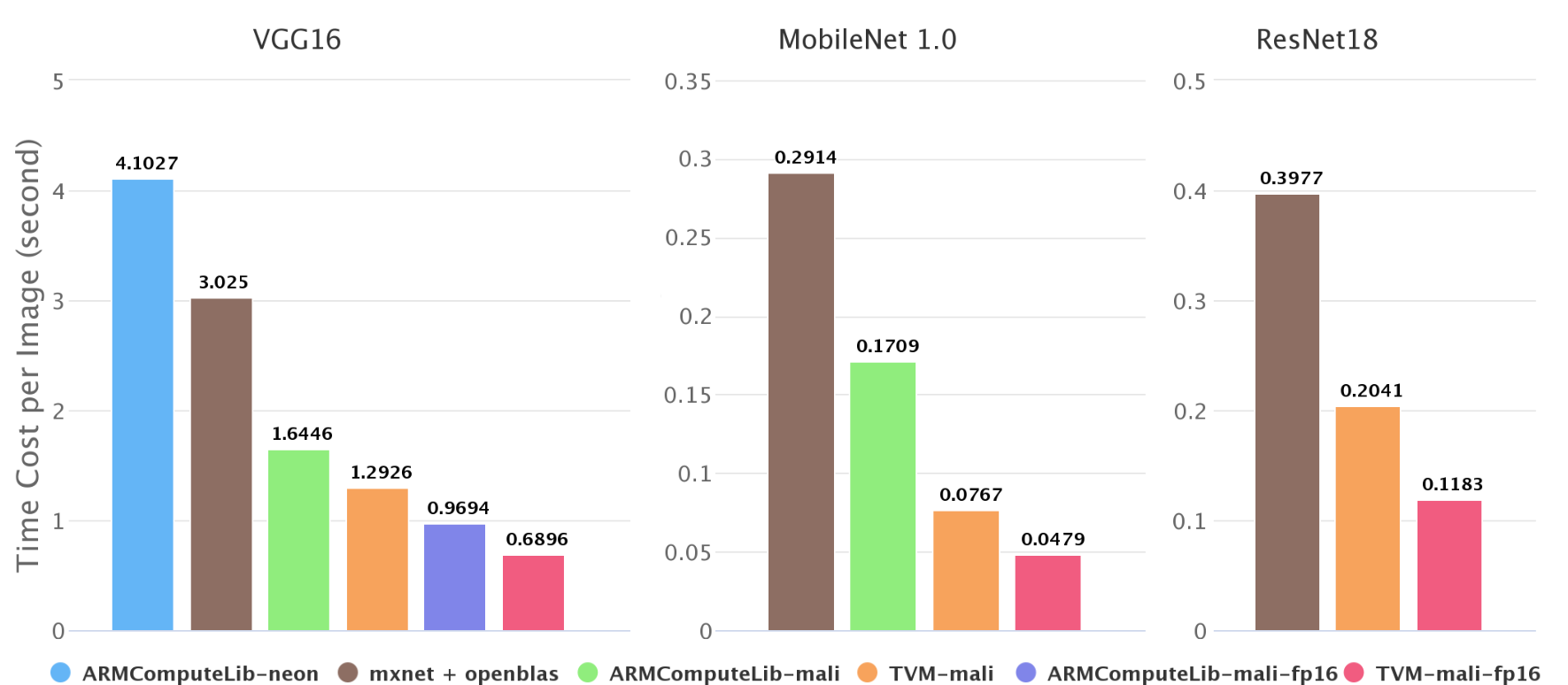

Optimizing Mobile Deep Learning on ARM GPU with TVM

DeepSpeed: Accelerating large-scale model inference and training